
ENGINEERING HYDROLOGY: CHAPTER 072 - REGRESSION ANALYSIS

1. BIVARIATE NORMAL DISTRIBUTION

1.01

The bivariate normal distribution is the foundation of regression theory.

1.02

The bivariate normal distribution is:

1.03

1.04

in which x and y are the random variables.

1.05

The parameters K and M are functions of the means μx and μy,

the standard deviations σx and σy, and the correlation

coefficient ρ.
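The slide equation is not reproduced here. As an illustration, the sketch below assumes the standard textbook density written as f(x, y) = K e^(-M), with K = 1 / [2π σx σy √(1 - ρ²)] and M = [zx² - 2ρ zx zy + zy²] / [2(1 - ρ²)], where zx = (x - μx)/σx and zy = (y - μy)/σy. How the original slide groups the terms into K and M is an assumption, and the function names are hypothetical.

```python
import math

def bivariate_normal_pdf(x, y, mu_x, mu_y, sigma_x, sigma_y, rho):
    """Bivariate normal density written as K * exp(-M) (assumed grouping of terms)."""
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    # Standardized variables
    zx = (x - mu_x) / sigma_x
    zy = (y - mu_y) / sigma_y
    # K: normalizing constant, depends on the standard deviations and rho
    K = 1.0 / (2.0 * math.pi * sigma_x * sigma_y * math.sqrt(1.0 - rho**2))
    # M: quadratic form, depends on the means, standard deviations and rho
    M = (zx**2 - 2.0 * rho * zx * zy + zy**2) / (2.0 * (1.0 - rho**2))
    return K * math.exp(-M)

def normal_pdf(v, mu, sigma):
    """Univariate normal density."""
    return math.exp(-0.5 * ((v - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

# With rho = 0 the joint density factors into the product of the two univariate densities
print(bivariate_normal_pdf(1.0, 2.0, 0.0, 1.0, 1.0, 2.0, 0.0))
print(normal_pdf(1.0, 0.0, 1.0) * normal_pdf(2.0, 1.0, 2.0))
```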

1.06

The conditional distribution of y given x is obtained by dividing the bivariate normal

by the univariate (marginal) normal distribution of x, to yield:

1.07

1.08

As in the case of the bivariate normal, for the conditional normal,

the parameters K' and M' are functions of the means,

the standard deviations, and the correlation

coefficient.
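The result of that division is not reproduced here. For reference, the standard form is given below, written with the same K' e^(-M') grouping. Matching the slide's exact notation is an assumption. The quotient is itself a normal density in y:

```latex
f(y \mid x) = \frac{f(x, y)}{f(x)} = K' e^{-M'}, \qquad
K' = \frac{1}{\sigma_y \sqrt{2\pi (1 - \rho^2)}}, \qquad
M' = \frac{\left[ y - \mu_y - \rho \frac{\sigma_y}{\sigma_x} (x - \mu_x) \right]^2}{2 \sigma_y^2 (1 - \rho^2)}
```

Reading off this form gives the conditional mean, μy + ρ(σy/σx)(x - μx), and the conditional variance, σy²(1 - ρ²).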

1.09

The conditional normal distribution has the following mean:

1.10

1.11

This equation expresses the linear dependence between x and y.

1.12

The slope of the regression line is:

1.13

1.14

The conditional normal distribution has the following variance:

1.15

1.16

The square of the correlation coefficient, ρ², is the fraction of the original variance

explained or removed by the regression.

1.17

For ρ = ±1, all the variance is removed.

1.18

For ρ = 0, all the variance remains.
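These statements can be checked numerically. The sketch below assumes the standard conditional results: mean μy + ρ(σy/σx)(x - μx) and variance σy²(1 - ρ²). It divides the joint density by the marginal density of x and compares the quotient with a normal density of that mean and variance. It then prints the fraction of variance removed, 1 - σ²(y|x)/σy², which equals ρ². The parameter values and function names are hypothetical.

```python
import math

def normal_pdf(v, mu, sigma):
    """Univariate normal density."""
    return math.exp(-0.5 * ((v - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def bivariate_normal_pdf(x, y, mu_x, mu_y, sigma_x, sigma_y, rho):
    """Bivariate normal density, K * exp(-M)."""
    zx = (x - mu_x) / sigma_x
    zy = (y - mu_y) / sigma_y
    K = 1.0 / (2.0 * math.pi * sigma_x * sigma_y * math.sqrt(1.0 - rho**2))
    M = (zx**2 - 2.0 * rho * zx * zy + zy**2) / (2.0 * (1.0 - rho**2))
    return K * math.exp(-M)

def conditional_moments(x, mu_x, mu_y, sigma_x, sigma_y, rho):
    """Mean and variance of y given x (standard bivariate-normal results)."""
    mean = mu_y + rho * (sigma_y / sigma_x) * (x - mu_x)  # straight line in x, slope rho*sigma_y/sigma_x
    var = sigma_y**2 * (1.0 - rho**2)                    # does not depend on x
    return mean, var

# Hypothetical parameters
mu_x, mu_y, sigma_x, sigma_y, rho = 10.0, 5.0, 2.0, 3.0, 0.8
x, y = 12.0, 7.5

# Conditional density = joint density / marginal density of x
quotient = bivariate_normal_pdf(x, y, mu_x, mu_y, sigma_x, sigma_y, rho) / normal_pdf(x, mu_x, sigma_x)
mean, var = conditional_moments(x, mu_x, mu_y, sigma_x, sigma_y, rho)
print(quotient, normal_pdf(y, mean, math.sqrt(var)))  # the two values agree to rounding error

# Fraction of the original variance removed: 1 - var / sigma_y**2 = rho**2
for r in (0.0, 0.5, 0.9, 1.0):
    _, v = conditional_moments(x, mu_x, mu_y, sigma_x, sigma_y, r)
    print(r, 1.0 - v / sigma_y**2)
```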

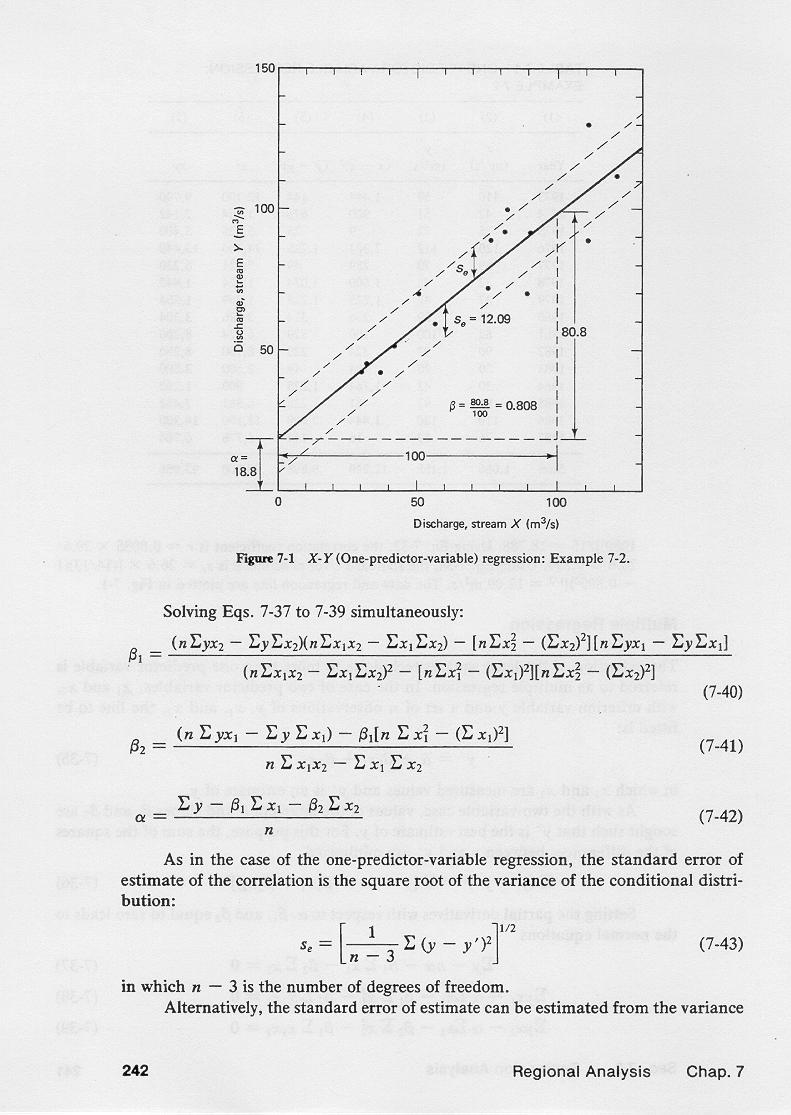

2. ONE-PREDICTOR-VARIABLE REGRESSION

2.01

Assume two or more random variables that are related.

2.02

The variable for which values are given is called the predictor variable.

2.03

The variable for which values must be estimated is called the

criterion variable.

2.04

The equation relating criterion and predictor variables is the

prediction equation.

2.05

The objective of regression analysis is to evaluate

the parameters of the prediction equation.

2.06

Correlation provides a measure of the goodness of fit

of the regression.

2.07

Therefore, while regression provides the parameters of the prediction

equation, correlation describes its quality.

2.08

This distinction is necessary because the predictor and criterion variables

cannot be interchanged unless the correlation coefficient is equal to ±1.
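The asymmetry can be seen numerically. The sketch below uses made-up data and a hypothetical helper `slope`. It computes the least-squares slope of y on x and the slope of x on y. Their product equals r². When the x-on-y line is drawn in the same x-y plane, its slope is the reciprocal of the x-on-y slope. The two lines therefore coincide only when r² = 1.

```python
def slope(u, v):
    """Least-squares slope of v regressed on u."""
    n = len(u)
    suv = sum(a * b for a, b in zip(u, v)) - sum(u) * sum(v) / n
    suu = sum(a * a for a in u) - sum(u) ** 2 / n
    return suv / suu

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]   # hypothetical data

b_yx = slope(x, y)   # y is the criterion, x the predictor
b_xy = slope(y, x)   # x is the criterion, y the predictor

# In the x-y plane the x-on-y line has slope 1 / b_xy; it differs from b_yx unless r**2 = 1
print(b_yx, 1.0 / b_xy)
print(b_yx * b_xy)   # equals r**2
```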

2.09

In hydrologic modeling, regression analysis is useful

in model calibration; correlation is useful in model formulation

and verification.

2.10

The principle of least squares is used in regression analysis

as a means of obtaining the best estimate of the parameters

of the prediction equation.

2.11

The principle is based on the minimization of the sum of the squares

of the differences between observed and predicted values.

2.12

The procedure can be used to regress one criterion variable

on one or more predictor variables.

2.13

In one-predictor-variable regression, the line to be fitted has the following form:

2.14

$$y' = \alpha + \beta x$$

2.15

The sum of the squares of the differences between the observed values y and the predicted values y' is minimized:

2.16

$$\sum (y - y')^2 = \sum [y - (\alpha + \beta x)]^2$$
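The step from the sum of squares to the parameters is not spelled out. In the standard derivation, the partial derivatives with respect to α and β are set to zero, which gives the two normal equations:

```latex
\begin{aligned}
\frac{\partial}{\partial \alpha} \sum [y - (\alpha + \beta x)]^2 = 0
  &\;\Rightarrow\; \sum y = n\alpha + \beta \sum x \\
\frac{\partial}{\partial \beta} \sum [y - (\alpha + \beta x)]^2 = 0
  &\;\Rightarrow\; \sum xy = \alpha \sum x + \beta \sum x^2
\end{aligned}
```

Eliminating α between the two equations gives β = [∑xy - (∑x ∑y)/n] / [∑x² - (∑x)²/n]. The first equation then gives α = (∑y - β∑x)/n.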

2.17

This leads to the parameters of the regression:

2.18

$$\beta = \frac{\sum xy - (\sum x \sum y)/n}{\sum x^2 - (\sum x)^2/n}$$

2.19
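A minimal Python sketch of these formulas follows. The intercept equation on slide 2.19 is not reproduced, so the sketch assumes the usual companion formula α = (∑y - β∑x)/n. With that formula, the fitted line passes through the point of means. The function name and data are hypothetical.

```python
def fit_line(x, y):
    """Least-squares estimates (alpha, beta) for y' = alpha + beta * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    sx, sy = sum(x), sum(y)
    sxy = sum(a * b for a, b in zip(x, y))
    sxx = sum(a * a for a in x)
    beta = (sxy - sx * sy / n) / (sxx - sx**2 / n)   # slope from the sums
    alpha = (sy - beta * sx) / n                      # intercept (assumed companion formula)
    return alpha, beta

# Usage with hypothetical data
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
alpha, beta = fit_line(x, y)
sse = sum((yi - (alpha + beta * xi)) ** 2 for xi, yi in zip(x, y))  # minimized sum of squares
print(alpha, beta, sse)
```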

2.20

Since the slope of the regression line is

2.21

2.22

the estimate from sample data is:

2.23

2.24

Therefore, the correlation coefficient is:

2.25
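Replacing ρ, σy and σx with their sample estimates r, sy and sx turns the slope ρσy/σx into β = r sy/sx, so r = β sx/sy. The sketch below uses hypothetical data and function names. It checks this relation against the direct sums-of-squares formula for r. The sample standard deviations use n - 1, and the ratio sx/sy is the same whichever divisor is used.

```python
import math
import statistics

def correlation_from_slope(x, y):
    """r = beta * s_x / s_y, with beta the least-squares slope of y on x."""
    n = len(x)
    beta = (sum(a * b for a, b in zip(x, y)) - sum(x) * sum(y) / n) / (
        sum(a * a for a in x) - sum(x) ** 2 / n
    )
    return beta * statistics.stdev(x) / statistics.stdev(y)

def correlation_direct(x, y):
    """Pearson r from the sums of squares and cross-products."""
    n = len(x)
    sxy = sum(a * b for a, b in zip(x, y)) - sum(x) * sum(y) / n
    sxx = sum(a * a for a in x) - sum(x) ** 2 / n
    syy = sum(b * b for b in y) - sum(y) ** 2 / n
    return sxy / math.sqrt(sxx * syy)

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]   # hypothetical data
print(correlation_from_slope(x, y), correlation_direct(x, y))
```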

2.26

The standard error of estimate is the standard deviation of the conditional

distribution.

2.27

For calculations based on sample data, the standard error of estimate is:

2.28

2.29
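Slides 2.28 and 2.29 are not reproduced above. The sketch below assumes the common sample form, which divides the residual sum of squares by n - 2, the number of observations minus the two estimated parameters: Se = √[∑(y - y')²/(n - 2)]. Because ∑(y - y')² = (1 - r²)∑(y - ȳ)², the same value equals sy √(1 - r²) √[(n - 1)/(n - 2)]. For large n this approaches sy √(1 - r²), the sample counterpart of σy √(1 - ρ²). The function name and data are hypothetical.

```python
import math
import statistics

def standard_error_of_estimate(x, y):
    """sqrt( sum (y - y')^2 / (n - 2) ) for the least-squares line (assumed n - 2 divisor)."""
    n = len(x)
    if n < 3:
        raise ValueError("need at least 3 points")
    sxy = sum(a * b for a, b in zip(x, y)) - sum(x) * sum(y) / n
    sxx = sum(a * a for a in x) - sum(x) ** 2 / n
    beta = sxy / sxx
    alpha = (sum(y) - beta * sum(x)) / n
    sse = sum((b - (alpha + beta * a)) ** 2 for a, b in zip(x, y))
    return math.sqrt(sse / (n - 2))

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]   # hypothetical data
se = standard_error_of_estimate(x, y)

# Same value from r and s_y
n = len(x)
sxy = sum(a * b for a, b in zip(x, y)) - sum(x) * sum(y) / n
sxx = sum(a * a for a in x) - sum(x) ** 2 / n
syy = sum(b * b for b in y) - sum(y) ** 2 / n
r = sxy / math.sqrt(sxx * syy)
se_from_r = statistics.stdev(y) * math.sqrt((1.0 - r**2) * (n - 1) / (n - 2))
print(se, se_from_r)
```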

2.30

The regression equations can be used to fit power functions of the type:

2.31

2.32

This equation is linearized by taking the logarithms:

2.33

2.34

With u = log x, and v = log y, this equation is:

2.35

2.36

Substituting u for x and v for y in the equations for the regression parameters

leads to:

2.37
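The sketch below fits a power function through logarithms. It assumes the power function has the form y = a x^b, so that log y = log a + b log x, and that all x and y are positive. It applies the one-predictor formulas to u = log x and v = log y, then back-transforms the intercept log a. Base-10 logarithms are assumed; any base works as long as the same base is used for the back-transform. The function name and data are hypothetical.

```python
import math

def fit_power(x, y):
    """Fit y = a * x**b by least squares on u = log10(x), v = log10(y)."""
    if min(x) <= 0 or min(y) <= 0:
        raise ValueError("power-function fit needs positive x and y")
    n = len(x)
    u = [math.log10(xi) for xi in x]
    v = [math.log10(yi) for yi in y]
    suv = sum(ui * vi for ui, vi in zip(u, v)) - sum(u) * sum(v) / n
    suu = sum(ui * ui for ui in u) - sum(u) ** 2 / n
    b = suv / suu                        # slope of v on u is the exponent b
    log_a = (sum(v) - b * sum(u)) / n    # intercept of v on u is log a
    return 10.0 ** log_a, b

# Hypothetical data generated from y = 2.5 * x**0.6
x = [1.0, 2.0, 5.0, 10.0, 20.0]
y = [2.5 * xi ** 0.6 for xi in x]
a, b = fit_power(x, y)
print(a, b)   # recovers a = 2.5 and b = 0.6 up to rounding
```

Fitting in log space minimizes the squared errors of log y rather than of y itself. The back-transformed curve is therefore not the least-squares fit in the original units.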

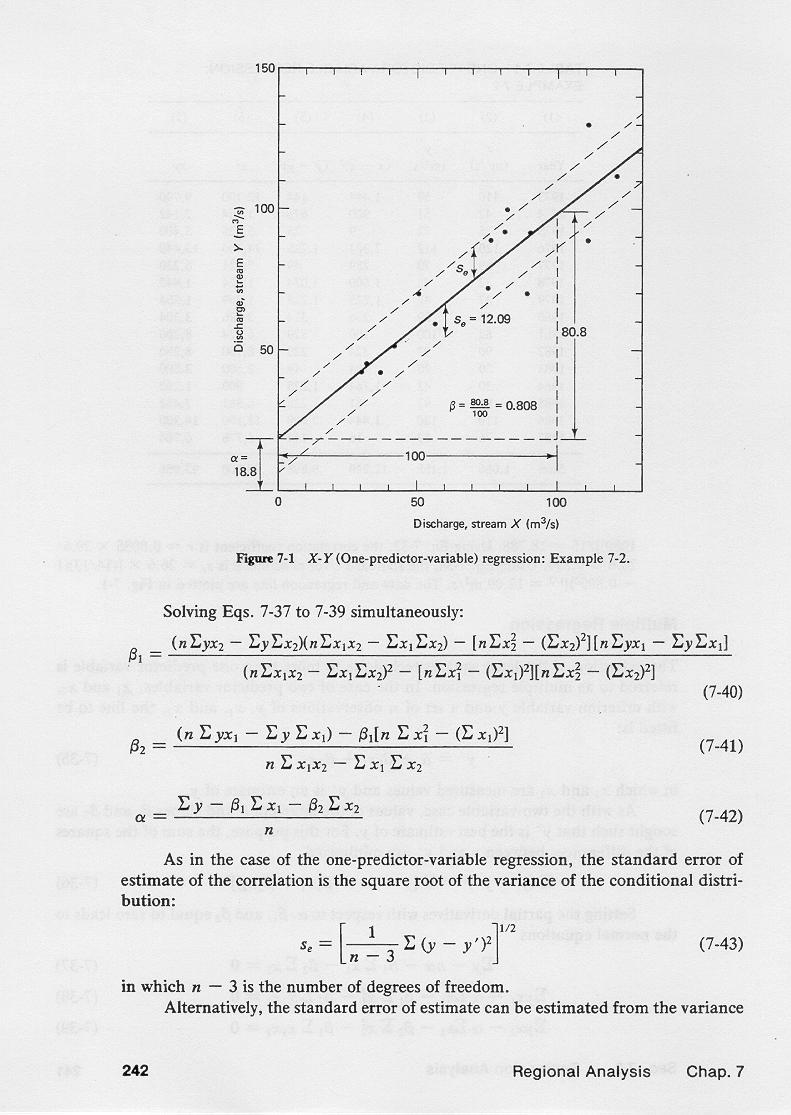

3. MULTIPLE REGRESSION

3.01

Multiple regression is the extension of the least squares technique

to more than one predictor variable.

3.02

For the case of two predictor variables, the equation to be fitted is:

3.03

$$y' = \alpha + \beta_1 x_1 + \beta_2 x_2$$

3.04

The sum of the squares of the differences between

the criterion variable y and its estimate y' is:

3.05

$$\sum (y - y')^2 = \sum [y - (\alpha + \beta_1 x_1 + \beta_2 x_2)]^2$$

3.06

The minimization of the sum of the squares leads to equations for

α, β1, and β2 as functions of the

predictor variables x1 and x2 and the criterion variable y.
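In the standard derivation, the partial derivatives of the sum of squares with respect to α, β1 and β2 are set to zero. This gives three normal equations. Using the first equation to eliminate α from the other two leaves two equations in β1 and β2. Solving those gives the expressions for β1 and β2, and the first equation then gives α:

```latex
\begin{aligned}
\sum y &= n\alpha + \beta_1 \sum x_1 + \beta_2 \sum x_2 \\
\sum y x_1 &= \alpha \sum x_1 + \beta_1 \sum x_1^2 + \beta_2 \sum x_1 x_2 \\
\sum y x_2 &= \alpha \sum x_2 + \beta_1 \sum x_1 x_2 + \beta_2 \sum x_2^2 \\[4pt]
n\sum y x_1 - \sum y \sum x_1 &= \beta_1 [n\sum x_1^2 - (\sum x_1)^2] + \beta_2 (n\sum x_1 x_2 - \sum x_1 \sum x_2) \\
n\sum y x_2 - \sum y \sum x_2 &= \beta_1 (n\sum x_1 x_2 - \sum x_1 \sum x_2) + \beta_2 [n\sum x_2^2 - (\sum x_2)^2]
\end{aligned}
```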

3.07

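$$\beta_1 = \frac{(n\sum y x_2 - \sum y \sum x_2)(n\sum x_1 x_2 - \sum x_1 \sum x_2) - [n\sum x_2^2 - (\sum x_2)^2][n\sum y x_1 - \sum y \sum x_1]}{(n\sum x_1 x_2 - \sum x_1 \sum x_2)^2 - [n\sum x_1^2 - (\sum x_1)^2][n\sum x_2^2 - (\sum x_2)^2]}$$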

3.08

$$\beta_2 = \frac{(n\sum y x_1 - \sum y \sum x_1) - \beta_1 [n\sum x_1^2 - (\sum x_1)^2]}{n\sum x_1 x_2 - \sum x_1 \sum x_2}$$

3.09

3.10
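A minimal Python sketch of the two-predictor formulas follows. The intercept equation on slide 3.09 is not reproduced, so the sketch assumes α = (∑y - β1∑x1 - β2∑x2)/n, which follows from the first normal equation. The β2 formula divides by n∑x1x2 - ∑x1∑x2, which is zero when the predictors are uncorrelated. In that case the sketch falls back to the other normal equation. The function name and data are hypothetical.

```python
def fit_two_predictors(x1, x2, y):
    """Least-squares (alpha, beta1, beta2) for y' = alpha + beta1*x1 + beta2*x2."""
    n = len(y)
    s1, s2, sy = sum(x1), sum(x2), sum(y)
    s11 = n * sum(a * a for a in x1) - s1**2                # n∑x1² - (∑x1)²
    s22 = n * sum(b * b for b in x2) - s2**2                # n∑x2² - (∑x2)²
    s12 = n * sum(a * b for a, b in zip(x1, x2)) - s1 * s2   # n∑x1x2 - ∑x1∑x2
    sy1 = n * sum(c * a for c, a in zip(y, x1)) - sy * s1    # n∑yx1 - ∑y∑x1
    sy2 = n * sum(c * b for c, b in zip(y, x2)) - sy * s2    # n∑yx2 - ∑y∑x2
    denom = s12**2 - s11 * s22
    if denom == 0:
        raise ValueError("x1 and x2 are collinear; beta1 and beta2 cannot be separated")
    beta1 = (sy2 * s12 - s22 * sy1) / denom
    if s12 != 0:
        beta2 = (sy1 - beta1 * s11) / s12   # the beta2 formula above
    else:
        beta2 = sy2 / s22                   # uncorrelated predictors: avoid dividing by zero
    alpha = (sy - beta1 * s1 - beta2 * s2) / n   # assumed intercept formula
    return alpha, beta1, beta2

# Hypothetical data lying exactly on the plane y = 1 + 2*x1 - 0.5*x2
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
y = [1.0 + 2.0 * a - 0.5 * b for a, b in zip(x1, x2)]
alpha, beta1, beta2 = fit_two_predictors(x1, x2, y)
print(alpha, beta1, beta2)   # recovers 1.0, 2.0, -0.5 up to rounding
```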

The multiple regression equations can be used to fit power functions of the type:

3.11

$$y = a\, x_1^{b_1} x_2^{b_2}$$

3.12

This equation is linearized by taking the logarithms:

3.13

$$\log y = \log a + b_1 \log x_1 + b_2 \log x_2$$

3.14

With u = log x1, v = log x2, and w = log y, this equation is:

3.15

$$w = \log a + b_1 u + b_2 v$$

3.16

Substituting u for x1, v for x2, and w for y in the equations for the regression parameters

leads to:

3.17
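The sketch below fits a two-predictor power function through logarithms. It assumes the form y = a x1^b1 x2^b2 with all data positive. It applies the two-predictor least-squares formulas to u = log x1, v = log x2 and w = log y, then back-transforms log a. Base-10 logarithms, the function names and the data are assumptions. Inside the solver, β2 is taken from the third normal equation. When n∑uv - ∑u∑v is nonzero this gives the same value as the slide formula, and it avoids dividing by that quantity.

```python
import math

def _fit_two(p, q, r):
    """Least-squares (alpha, beta1, beta2) for r' = alpha + beta1*p + beta2*q."""
    n = len(r)
    sp, sq, sr = sum(p), sum(q), sum(r)
    spp = n * sum(a * a for a in p) - sp**2
    sqq = n * sum(b * b for b in q) - sq**2
    spq = n * sum(a * b for a, b in zip(p, q)) - sp * sq
    srp = n * sum(c * a for c, a in zip(r, p)) - sr * sp
    srq = n * sum(c * b for c, b in zip(r, q)) - sr * sq
    beta1 = (srq * spq - sqq * srp) / (spq**2 - spp * sqq)
    beta2 = (srq - beta1 * spq) / sqq            # third normal equation, solved for beta2
    alpha = (sr - beta1 * sp - beta2 * sq) / n   # first normal equation, solved for alpha
    return alpha, beta1, beta2

def fit_power2(x1, x2, y):
    """Fit y = a * x1**b1 * x2**b2 by least squares on base-10 logarithms."""
    if min(x1) <= 0 or min(x2) <= 0 or min(y) <= 0:
        raise ValueError("power-function fit needs positive data")
    u = [math.log10(t) for t in x1]
    v = [math.log10(t) for t in x2]
    w = [math.log10(t) for t in y]
    log_a, b1, b2 = _fit_two(u, v, w)
    return 10.0 ** log_a, b1, b2

# Hypothetical data generated from y = 0.8 * x1**1.2 * x2**-0.3
x1 = [1.0, 2.0, 4.0, 8.0, 3.0, 6.0]
x2 = [5.0, 1.0, 2.0, 9.0, 7.0, 3.0]
y = [0.8 * a ** 1.2 * b ** -0.3 for a, b in zip(x1, x2)]
a, b1, b2 = fit_power2(x1, x2, y)
print(a, b1, b2)   # recovers 0.8, 1.2, -0.3 up to rounding
```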

Narrator: Victor M. Ponce

Music: Fernando Oñate

Editor: Flor Pérez

Copyright © 2011

Visualab Productions

All rights reserved
